AI’s increasing energy appetite

Generative AI tools use more energy than you might expect. OHIO experts explore the hidden energy costs of AI.

Gabe Preston '06 | November 8, 2024

Have a big presentation coming up and want to use AI to help you prepare? Or maybe you’re just curious about what a California coastal scene would look like with giant unicorns running on the beach, so you put an AI tool to the test.

But before you keep feeding your favorite generative artificial intelligence (GenAI) tool prompts, here’s something to consider: ChatGPT uses as much energy each day as 180,000 U.S. households, according to a recent study shared by Forbes. It’s an eyebrow-raising statistic that puts GenAI’s current energy drain in perspective. And while GenAI is still a relatively new technology, experts are watching this energy-gobbling trend tick upward, with no sign of it slowing down.

Fueling the future of artificial intelligence is a tall(er) order

Researchers sort energy consumption into three main buckets: training, inference and computing hardware requirements, with training being the main energy drain. GenAI models with more parameters, such as GPT-4, one of the models behind ChatGPT, require more training data and computation. GenAI behemoths aside, researchers have determined that training even a medium-sized generative AI model produced about 626,000 pounds of CO2 emissions, the same as driving five average American cars through their lifetimes.

Daniel Karney, OHIO associate professor of economics, specializes in energy and environmental policy and shares a surprising statistic.

“The average ChatGPT query needs 10 times as much electricity to process relative to a Google search,” he says.

According to the U.S. Department of Energy, data centers are 10 to 50 times more energy-intensive per square foot than conventional commercial buildings. That makes GenAI’s energy use an issue that needs to be addressed, and quickly, for anyone who worries about sustainability and GenAI’s potential strain on existing electrical infrastructure.

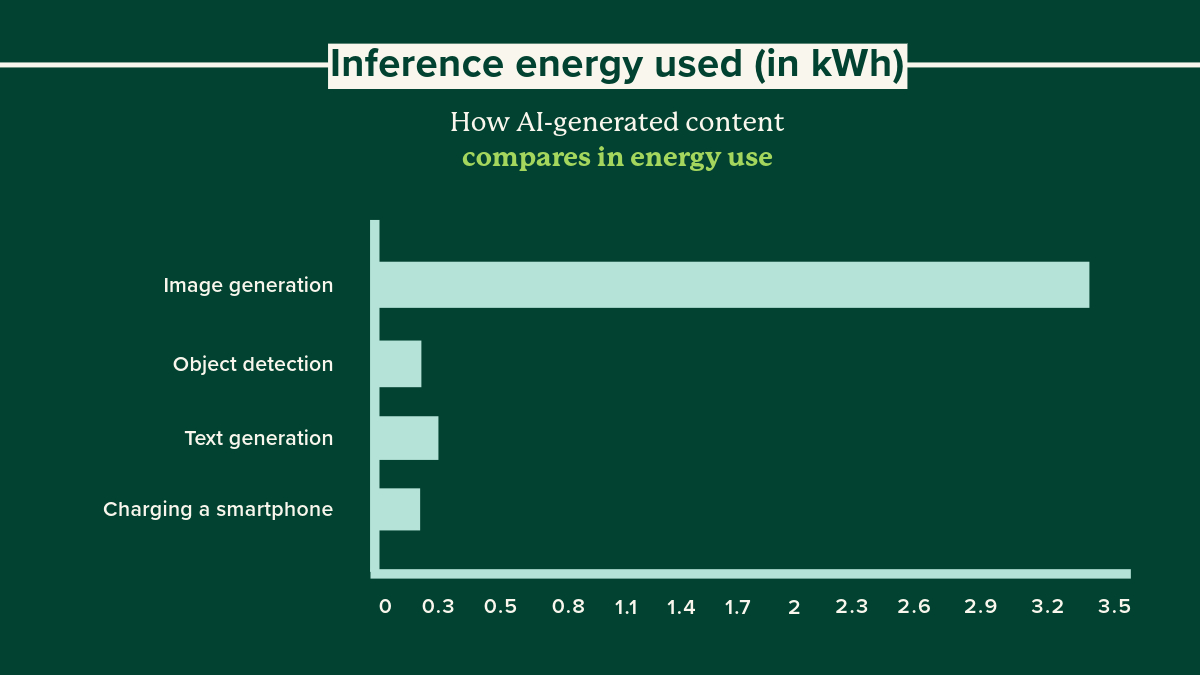

Yet, GenAI doesn’t require energy evenly across the board. When broken down by use case, there’s one significant outlier that’s quickly become one of the worst energy-draining culprits: Image generation.

Image generation tends to require far more energy than any other function, so much so that experts estimate that creating just one image with GenAI uses as much energy as charging your phone. While that might not sound like much, consider that the most popular GenAI tools are used millions, if not billions, of times a day.

Data source for graph: https://arxiv.org/pdf/2311.16863
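To see how a small per-image cost adds up, the short Python sketch below multiplies an energy cost per image by a daily image count. The numbers are hypothetical placeholders, not measurements: about 12 watt-hours per image (roughly the order of a smartphone charge) and about 30 kilowatt-hours per day for an average U.S. household are assumptions chosen only for illustration.

```python
# Hypothetical placeholder values for illustration, not measurements.
WH_PER_IMAGE = 12.0           # assumed energy per generated image, in watt-hours
HOUSEHOLD_KWH_PER_DAY = 30.0  # assumed average U.S. household use, in kWh per day


def daily_energy_kwh(wh_per_image: float, images_per_day: int) -> float:
    """Total energy for a day's worth of images, converted from Wh to kWh."""
    return wh_per_image * images_per_day / 1000.0


def household_equivalent(kwh_per_day: float,
                         household_kwh: float = HOUSEHOLD_KWH_PER_DAY) -> float:
    """How many households' daily electricity use that total corresponds to."""
    return kwh_per_day / household_kwh


for images in (1_000_000, 1_000_000_000):
    kwh = daily_energy_kwh(WH_PER_IMAGE, images)
    print(f"{images:>13,} images/day -> {kwh:,.0f} kWh/day "
          f"(~{household_equivalent(kwh):,.0f} households)")
```

Under these assumed figures, a million images a day comes to 12,000 kWh, about the daily use of 400 households; a billion images a day would match roughly 400,000 households.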

Planning for a more efficient Large Language Model

The energy needed to train GenAI models isn’t the whole picture. Hardware is also part of the issue, and potentially part of the solution. Data centers contain servers, which produce heat as they process information. The bigger the data center, the more heat it emits. Likewise, the greater the demand for processing (such as a GenAI query instead of a normal Google search), the more heat it emits. Understandably, the data centers that handle AI workloads generate a lot of heat.

Ohio University Loehr Professor Greg Kremer, an expert in both engineering and energy conservation, offered his thoughts on how to improve AI’s energy efficiency.

“We can develop better ways to use the heat generated by AI servers housed in data centers. Combined heat and power systems, where processes that require heat are located near processes that generate heat, offer one way to utilize data center heat waste and increase system-level efficiency. For this approach to work with data centers, innovations are needed to collect, concentrate and boost the heat energy to useful levels.”

When weighing the benefits of GenAI against the costs of building and running the models, Kremer added, “The peak electrical demand [is what] drives capacity. It could be interesting to require large AI data centers to install large grid-storage capability to help manage the electrical supply/demand in the grid and try to mitigate environmental impacts in part through load transfer.”

Kremer explained the benefits of load transfer in simpler terms.

“If large electrical users (like AI data centers) installed grid storage of a magnitude similar to their electricity needs during the grid's peak hours, they could capitalize on the fact that there is excess capacity on the electrical grid at off-peak hours, store it, then release it during periods of peak demand on the grid so that they do not add to the required peak capacity of the grid. This would reduce the need for additional power generation sources to power AI data centers,” Kremer said.
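Kremer’s load-transfer idea can be illustrated with a simplified daily model. The Python sketch below is a hypothetical illustration, not a model from Kremer or any utility: it assumes a lossless battery, hourly load values, a flat 100 kW facility load and an arbitrary 4 p.m. to 9 p.m. grid peak. It shows how energy stored off-peak can cut a facility’s draw during peak hours while its total daily consumption stays the same.

```python
def grid_draw_with_storage(load_kw, peak_hours, storage_kwh):
    """Hourly grid draw (kW) for a facility that shifts its load away from the grid peak.

    Simplifying assumptions, for illustration only:
      * load_kw holds hourly average loads, so 1 kW for one hour equals 1 kWh;
      * storage is lossless and is refilled during the same day's off-peak hours;
      * discharge is spread evenly across peak hours, never exceeding that hour's load.
    """
    peak = set(peak_hours)
    off_peak = [h for h in range(len(load_kw)) if h not in peak]
    if not peak or not off_peak:
        raise ValueError("need at least one peak hour and one off-peak hour")

    # Energy supplied by storage in each peak hour, capped at the facility's load.
    per_peak_hour = storage_kwh / len(peak)
    discharged = {h: min(load_kw[h], per_peak_hour) for h in peak}

    # Recharge exactly what was discharged, spread evenly over off-peak hours.
    recharge = sum(discharged.values()) / len(off_peak)

    return [
        load - discharged[h] if h in peak else load + recharge
        for h, load in enumerate(load_kw)
    ]


load = [100.0] * 24   # hypothetical flat 100 kW facility load
peak = range(16, 21)  # hypothetical grid peak: 4 p.m. to 9 p.m.
draw = grid_draw_with_storage(load, peak, storage_kwh=500.0)
print(max(draw[h] for h in peak))  # 0.0: storage covers the whole peak
```

With 500 kWh of storage, enough for the five peak hours, the facility draws nothing from the grid during the peak and instead draws about 126 kW in each off-peak hour. Its daily energy use is unchanged; it is only shifted in time, which is what keeps it from adding to the grid’s required peak capacity.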

Experts like Kremer and Karney recognize that AI’s projected energy use over the next decade is significant.

“Projections are that data centers will consume 3-4 percent of annual worldwide electricity by the end of the decade. Carbon dioxide emissions associated with data center electricity use is set to double from 2022 to 2030,” Karney said.

While this increase is concerning, he noted there are other ways to mitigate the energy drain from large language models.

“Since U.S. electricity generation is still fossil-fuel based, then more demand typically leads to more carbon emissions,” Karney says. “However, if data center owners paid to install renewable sources of electricity to offset their increased demand, then the impact on overall carbon emissions would be significantly reduced.”
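A doubling of emissions between 2022 and 2030 implies a steep yearly growth rate. The short Python calculation below works that rate out, assuming steady compound growth over those eight years; that smooth-growth assumption is ours, and actual year-to-year growth need not be even.

```python
def implied_annual_growth(factor: float, years: int) -> float:
    """Constant yearly growth rate that multiplies a quantity by `factor` over `years`.

    Solves (1 + r) ** years == factor for r, assuming smooth compound growth.
    """
    return factor ** (1.0 / years) - 1.0


rate = implied_annual_growth(2.0, 2030 - 2022)
print(f"{rate:.1%} per year")  # roughly 9% per year
```

In other words, emissions would need to grow by roughly 9% every year to double by 2030.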

Thankfully, some industry experts are already working toward a sustainable solution to GenAI’s rising energy needs. The Green AI Institute was established for just that purpose. Describing itself as a “collective of visionary researchers, academics, and professionals,” the institute has devoted its mission to advancing the integration of artificial intelligence and environmental sustainability. Aside from authoring a white paper that examines the escalating environmental impact of AI and data centers, it hosted the Green AI Summit in October, one of the first events of its kind.

Consumers may also breathe a little easier knowing that utilities are taking steps to manage the energy demand from data centers. In October, AEP Ohio announced that new data centers would need to "pay for at least 85% of the electricity they say they need each month, even if they use less, to cover the cost of infrastructure needed to bring electricity to those facilities."
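The 85% requirement works like a minimum bill. The Python sketch below shows that arithmetic as the quoted announcement describes it; it deliberately simplifies the actual tariff, and the function name and monthly figures are hypothetical.

```python
MINIMUM_SHARE = 0.85  # share of requested electricity that must be paid for, per the quoted announcement


def billed_kwh(requested_kwh: float, used_kwh: float,
               minimum_share: float = MINIMUM_SHARE) -> float:
    """Electricity a data center pays for in a month: actual use or the minimum, whichever is larger."""
    return max(used_kwh, minimum_share * requested_kwh)


# Hypothetical month: a facility says it needs 1,000,000 kWh.
print(billed_kwh(1_000_000, 600_000))  # uses less than 85%, so it pays the 85% minimum
print(billed_kwh(1_000_000, 950_000))  # uses more than 85%, so it pays for actual use
```

A facility that overstates its needs still pays for most of the capacity it asked for, which is how the rule helps cover the cost of the infrastructure built to serve it.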

What's next for sustainable growth in GenAI?

While initiatives like the Green AI Institute are a step in the right direction, a consensus hasn’t been reached on the best way to curb GenAI’s growing energy appetite. Most experts agree that the industry needs to come together to create a more sustainable growth model. In the meantime, artificial intelligence continues to evolve, with new use cases emerging all the time:

- Artificial General Intelligence (AGI): This idea suggests that AI might one day be able to outperform humans in a wide range of activities and intellectual pursuits. Some experts think the timing of this new marvel could be as soon as 2033.

- Embedded AI: Embedding artificial intelligence into an enterprise tool to improve processes and productivity is already occurring to some degree, but the next level of AI autonomy may represent a more intuitive web experience for visitors. With an embedded AI at the helm, content could be constantly customized and streamlined to connect with more of the right customers with the right message, a lofty but often missed goal for marketers and advertisers.

- AI agents: Think Microsoft Copilot’s meeting summary is helpful now? Imagine that same summary, except customized for you because it knows what’s most important to your role at the company. AI agents could also be trained to manage your personal daily communications, like texts and emails. They are among the hottest buzzwords in artificial intelligence right now because the technology isn’t as distant a reality as some other future AI endeavors.

- Personal Large Action Models (PLAMs) and Corporate Large Action Models (CLAMs): An advanced version of AI agents, these are machines that collect data from our personal lives and begin taking over tasks to make our lives easier and more carefree. For adults, that may look like a digital personal assistant negotiating the price of a flight you need to take next spring, or offering investment advice based on your specific portfolio needs. For children, that may even become a machine that keeps them company, protects them from dangers when out in public, or assists them during an emergency.

Generating a solution to the AI energy grab

Optimizing algorithms, improving hardware, using renewable energy sources and creating forums where experts can establish industry best practices could be the industry’s next logical steps toward harnessing the potential of generative AI without compromising environmental goals. As AI continues to evolve, finding sustainable solutions will be crucial for its long-term adoption and integration into our everyday lives. In the meantime, maybe we can reconsider asking ChatGPT to create an image of giant unicorns running on the beach.