ChatGPT and the Colossal Cave Adventure

Like many people, Bruce Tong, an instructor and MITS alumnus, has been dabbling with ChatGPT and was looking to get a little experience with the ChatGPT Python Application Programming Interface (API). Bruce wanted to use a Python program to interact with ChatGPT. For that, he needed some reason for a Python program to require predictive text.

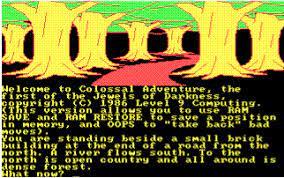

This is where the Colossal Cave Adventure entered the picture. It is a text-based game dating back to 1976, commonly found on Unix systems. It was freely available for Linux, and Bruce knew he could make it available as a network service using another classic Unix service called "xinetd." Among xinetd's features is the ability to take a program that reads from standard input and writes to standard output, as nearly all command-line applications do, and turn it into a networked service. By pairing Colossal Cave Adventure with xinetd, the classic adventure game becomes accessible over a network and even over the Internet.

The next step was to write a Python script that could connect to, and exchange text with, the Colossal Cave Adventure. In effect, what was needed was a telnet client written in Python. It sounds like a lot of work, but it is largely just reading and writing text to a "socket," which is kind of like reading and writing to a file but is instead a network connection. Bruce prompted ChatGPT to write this script, saying "Write a Python script to open a TCP socket and send and receive text." The resulting script would do so but only for one transaction. A further prompt was needed: "Modify the script so that sending and receiving data are done in a loop until Control-C is typed." Further prompts had ChatGPT modify the structure of the script, breaking more things into isolated functions and moving hard-coded values into variables. There was a complication in that the Colossal Cave Adventure sends one letter per packet, so the script needed to implement its own buffering instead of being able to rely on the operating system or the runtime environment to do that.
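The buffering described above can be sketched as follows. This is not Bruce's script; it is a minimal illustration that assumes a message is complete once the game has been silent for a short time. The function names, the `idle_timeout` value, and the host and port passed to `run_client` are all assumptions made for the example.

```python
import socket


def read_until_quiet(sock, idle_timeout=0.5, chunk_size=1024):
    """Collect text until the peer has been silent for idle_timeout seconds.

    The game may deliver one character per packet, so a single recv() call
    cannot be trusted to return a whole message.
    """
    sock.settimeout(idle_timeout)
    chunks = []
    while True:
        try:
            data = sock.recv(chunk_size)
        except socket.timeout:
            break  # nothing arrived in time: treat the message as complete
        if not data:
            break  # the peer closed the connection
        chunks.append(data)
    return b"".join(chunks).decode("ascii", errors="replace")


def send_line(sock, text):
    """Send one line of text, terminated by a newline."""
    sock.sendall((text + "\n").encode("ascii", errors="replace"))


def run_client(host, port):
    """Alternate between reading the game's output and sending typed input."""
    with socket.create_connection((host, port)) as sock:
        try:
            while True:
                print(read_until_quiet(sock), end="")
                send_line(sock, input())
        except KeyboardInterrupt:
            pass  # Control-C ends the session
```

Waiting for silence is only one way to decide that a message has ended. Watching for the game's prompt would be another, at the cost of tying the script more closely to one game.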

All of those modifications were applied via ChatGPT prompts, but they were preparation for the next step: asking ChatGPT to add calls to the ChatGPT API so that the script could act as a middleman between the game and ChatGPT. The script's job then becomes taking text from the game and sending it to ChatGPT, then taking ChatGPT's responses and sending them to the game. In this way, ChatGPT would play the game. ChatGPT delivered, inserting the necessary calls to its own API.
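The middleman role can be pictured as a simple relay loop. The sketch below is an illustration, not the script ChatGPT produced. The three callables `read_game`, `write_game`, and `ask_model` are hypothetical stand-ins for the socket reads and writes and for the ChatGPT API call, so the example runs without a network connection or an API key.

```python
def relay(read_game, write_game, ask_model, max_turns=10):
    """Pass text from the game to the model and back, one exchange per turn.

    read_game():     returns the game's latest output, or "" when it has ended
    write_game(cmd): sends a command to the game
    ask_model(text): returns the model's reply to the game's output
    """
    transcript = []
    for _ in range(max_turns):
        game_text = read_game()
        if not game_text:
            break  # the game closed the connection or went silent
        command = ask_model(game_text)
        write_game(command)
        transcript.append((game_text, command))
    return transcript


# Usage with stand-ins: a scripted "game" and a model that always walks north.
game_output = iter(["WELCOME TO ADVENTURE...", "YOU ARE IN A FOREST.", ""])
sent_commands = []
relay(lambda: next(game_output), sent_commands.append, lambda text: "go north")
print(sent_commands)
```

Keeping the game and the model behind plain functions also makes the loop easy to exercise on its own, which helps when debugging the interface between the two systems.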

At this point, the script was getting large enough that continuing to ask ChatGPT to make the changes was becoming cumbersome. The script needed numerous small tweaks and lots of debugging to iron out the interface between the two systems. For instance, ChatGPT likes to use complete sentences, but the game expects two-word commands. ChatGPT uses mixed case and punctuation; the game does not. And, as a sign of issues to come, ChatGPT version 3.5 liked to forget its initial instructions and would start apologizing to the game for issuing unknown commands. Those apologies would, of course, also be unknown commands, leading to an endless loop of polite but unproductive communication.
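One of those small tweaks, reducing a reply to something the game can parse, might look like the sketch below. The function name and the rule of keeping only the first two words are assumptions for illustration. It is a crude filter: a reply such as "Take the lamp, please." would become "take the", so the instructions given to ChatGPT still have to do most of the work.

```python
import re


def to_game_command(reply, max_words=2):
    """Reduce a model reply to at most max_words lowercase words.

    Punctuation is dropped and case is folded, because the game expects
    short, plain commands rather than complete sentences.
    """
    words = re.findall(r"[a-z0-9]+", reply.lower())
    return " ".join(words[:max_words])


print(to_game_command("Go North."))
print(to_game_command("TAKE lamp!"))
```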

This brings us to what Bruce found to be the most interesting aspect of the effort: scripts must manage the conversation's history. The various versions of ChatGPT all have a limited buffer size, often called the context window. Later versions have larger buffers than earlier versions, so they can deal with longer conversation histories, but all of them have limits. Processing a longer conversation also consumes more "tokens," which costs more. At scale, those costs could become significant.

In Python, a conversation is represented as a list of dictionaries. Consider the following:

    conversation = [
        {"role": "system", "content": "YOU'RE PLAYING THE GAME ADVENTURE."},
        {"role": "system", "content": "COMMANDS ARE ONE OR TWO WORDS."},
        {"role": "user", "content": "WELCOME TO ADVENTURE..."},
        {"role": "assistant", "content": "go north"},
    ]

The "system" role is used to give ChatGPT instructions. The "user" role is what the user wants to send to ChatGPT. In this case, the game is the user. The "assistant" role is what ChatGPT has replied. As the conversation takes place, your program likely keeps adding to this list, so the list eventually grows larger than ChatGPT's buffer. Then ChatGPT starts to ignore parts of the conversation. Your script must include something to manage the conversation. You might, for instance, treat your list as a queue, a First-In First-Out (FIFO) data structure, but that will eventually remove your instructions to ChatGPT from the conversation. Managing the growth of the list might also mean leaving error exchanges out of the conversation, since they are unlikely to help ChatGPT. You may have to reassemble the conversation for each transaction so that your instructions to ChatGPT stay part of it.
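One way to combine the FIFO idea with reassembling the conversation for each transaction is sketched below. It is an illustration under stated assumptions, not Bruce's implementation. The names `make_history`, `record_exchange`, and `build_conversation` are invented for the example, and the limit of 20 exchanges is arbitrary. A real script would more likely size the limit by token count.

```python
from collections import deque

# Instructions are kept apart from the history so they can never be evicted.
INSTRUCTIONS = [
    {"role": "system", "content": "YOU'RE PLAYING THE GAME ADVENTURE."},
    {"role": "system", "content": "COMMANDS ARE ONE OR TWO WORDS."},
]


def make_history(max_exchanges=20):
    """Create a FIFO history; the oldest exchange drops off when it is full."""
    return deque(maxlen=max_exchanges)


def record_exchange(history, game_text, reply):
    """Remember one (game output, model reply) pair."""
    history.append((game_text, reply))


def build_conversation(history, game_text):
    """Reassemble the message list for the next request.

    The result is always: instructions, then the retained exchanges in
    order, then the game's newest output as the final "user" message.
    """
    messages = list(INSTRUCTIONS)
    for past_game_text, past_reply in history:
        messages.append({"role": "user", "content": past_game_text})
        messages.append({"role": "assistant", "content": past_reply})
    messages.append({"role": "user", "content": game_text})
    return messages


# Usage: with room for two exchanges, the first one is forgotten,
# but both system instructions are still sent.
history = make_history(max_exchanges=2)
record_exchange(history, "WELCOME TO ADVENTURE...", "go north")
record_exchange(history, "YOU ARE IN A FOREST.", "go south")
record_exchange(history, "YOU ARE IN A VALLEY.", "take lamp")
for message in build_conversation(history, "I SEE NO LAMP HERE."):
    print(message)
```

Because the list is rebuilt from scratch each time, the size of every request is bounded, and the instructions always come first no matter how long the game has been running.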

So what was the end result of ChatGPT 3.5 playing the Colossal Cave Adventure? ChatGPT mostly just wandered around the woods picking up and dropping objects. Remember, it is a predictive text system and does not formulate strategy or even recognize that there are objectives in the game. It is very much like the "Infinite Monkey Theorem," which states that a monkey hitting keys at random for an infinite amount of time will almost surely type the complete works of Shakespeare.

As for using ChatGPT to write Python scripts, it is a handy tool. ChatGPT is unable to write larger programs with many requirements, but it is better at writing targeted functions that satisfy well-written prompts. The better you can communicate like a programmer, the more likely ChatGPT will deliver what you want. How do you learn to communicate like a programmer? You study programming. Terminology can be taught. How do you assemble larger programs from functions ChatGPT has written? Experience. You need to have written programs yourself and come to an understanding of how best to design and integrate the associated logic. That's something you have to teach yourself, but it can be coached.